So your website just went viral. Congrats! Now you have a new set of problems: How do you scale your application and services to meet your growing demand? How do you manage multiple Linodes all participating as a single system? How do you monitor the health of those Linodes, and take them in and out of rotation automatically? How do you coordinate deployment of new codebases without disrupting existing sessions?

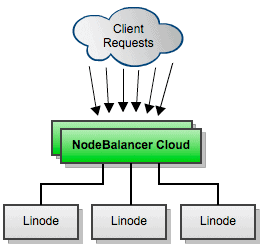

NodeBalancer is a managed load-balancer-as-a-service (LBaaS) built on a highly available cloud cluster architecture. Placing a NodeBalancer in front of your Linode cloud servers lets you scale your applications and services horizontally, and it adds conveniences such as performing rolling upgrades across your fleet without affecting existing sessions.
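To make the rolling-upgrade idea concrete, here is a small Python simulation under stated assumptions. The `Backend` class, its `"accept"`/`"drain"` modes and `rolling_upgrade` are hypothetical names for illustration, not the NodeBalancer API. Each backend in turn stops receiving new sessions, lets its existing sessions finish, is upgraded, and rejoins the rotation, so the rest of the pool keeps serving throughout.

```python
from dataclasses import dataclass

@dataclass
class Backend:
    name: str
    version: str
    mode: str = "accept"      # hypothetical modes: "accept" (in rotation) or "drain"
    active_sessions: int = 0  # sessions currently pinned to this backend

def rolling_upgrade(pool, new_version):
    """Upgrade one backend at a time; return the fewest backends left serving."""
    min_serving = len(pool)
    for backend in pool:
        backend.mode = "drain"  # stop handing this backend new sessions
        # A real rollout would wait for sessions to end on their own;
        # here each one is simply counted down to simulate that.
        while backend.active_sessions > 0:
            backend.active_sessions -= 1
        serving = sum(1 for b in pool if b.mode == "accept")
        min_serving = min(min_serving, serving)
        backend.version = new_version  # nothing is connected now, so upgrade
        backend.mode = "accept"        # put it back into rotation
    return min_serving

pool = [Backend("web1", "1.0", active_sessions=3),
        Backend("web2", "1.0", active_sessions=5),
        Backend("web3", "1.0")]
print(rolling_upgrade(pool, "1.1"))  # → 2
```

Upgrading one backend at a time trades rollout speed for capacity: with three backends, two are always in rotation.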

A NodeBalancer is $19.95/mo and can balance multiple ports at no additional cost. Public network transfer is deducted from your existing Transfer Pool. For complete details, please see

You’ll notice a new ‘NodeBalancer’ tab in the Linode Manager where you can manage your NodeBalancers. Enjoy!

-Chris

Comments (46)

You just killed any possibility of us moving to Amazon EC2 in the near future. 😀

Loving Linode again!

This is a great addition to the set of features available natively in Linode. Will give this a try.

Service looks good, price looks good, documentation looks good!

I wish I didn't use MSSQL and ColdFusion on my website. Damn, Linode really needs to think about Winodes 🙂

Anyway… great job. You guys will rule the world someday.

Congrats, a good (and necessary) move on your side, good to see Linode moving further forward.

Just when we were thinking of writing our own, you drop this in our laps 🙂

Love all the way guys!

Any plans on supporting TLS/SSL termination?

Another great service, keep 'em coming, guys!

Thanks, guys, great job! It’s really nice to have features like this taken care of, without having to build our own HAProxy/Varnish servers. I’m sure the overall utilisation of the infrastructure is much better.

Keep up the good work!

Kristof

P.S. Can’t wait to have IPv6 in Europe, too! 🙂

Chris ~

Would this ever work with SSL?

Does it do HTTP caching too? If not, you should consider adding it as an option.

Very interesting!

How exactly is the NodeBalancer implemented? Is it a software-based load-balancer (like ELB) or is it hardware accelerated (using something like F5 or Zeus load-balancers)?

@Scott, not likely. Better to spread the SSL load out across the backends than to create a bottleneck at the NodeBalancer level.

@Preston, no it does not.

@Nikhil it’s implemented in software which runs on hardware 🙂

Service looks good, price looks good, documentation looks good!

+1 !

I have to say that you guys rock!

Again!!!

You guys have got to be the best host I’ve ever come across and for reasons like this you are the only host I recommend. I look forward to many more years of hosting with you.

Thank you for this one! You guys rock! My Linode bill is the only bill I’m truly happy to pay. Thanks again!

Great news, congrats!

What is the load balancer, and how does it ensure high availability? Is it entirely custom, or are you using Zeus, F5, HAProxy or nginx? Heartbeat for HA?

Thanks!

The million dollar question: Do the NodeBalancers support IPv6?

@Jon, for your million-dollar question, read the one-cent answer 😉

NodeBalancer page -> Features -> blablabla -> Native IPv6 enabled

I really like Linode from a quality perspective, but one thing that really bothers me is the lack of nodes and/or servers in Asia (Singapore). I will wait until Linode comes to Asia.

Damn fine fare. Tweeted.

That’s really great!! Do you have any plans for managed services, as discussed in the Linode forum?

It’s what I need!

Hope I didn’t miss the answer somewhere else…but what happens if my NodeBalancer goes down?

Hey Chad!

NodeBalancer was designed from the beginning for high availability and redundancy. In the unlikely event that your NodeBalancer fails, the Linode it is running on fails, or even the host that is running that Linode fails, another member of the cluster will pick it up transparently within seconds. We built the cluster intentionally on different circuits, switch legs, and so on for that very reason. There was even consideration of physical location within the facility when placing cluster nodes.

If all of your backends fail, which is a slightly different scenario, NodeBalancer will serve a 500-type response to your visitors (in HTTP mode; TCP mode behaves predictably).
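The behavior described above (send traffic only to healthy backends, answer with an error when none are left) can be sketched as a health-aware round-robin. This is a minimal illustration, not NodeBalancer's implementation; the class and method names are hypothetical, and 503 Service Unavailable is used as one plausible 500-type status because the reply does not say which code is returned.

```python
class RoundRobinPool:
    """Hypothetical health-aware round-robin over a fixed list of backends."""

    def __init__(self, backends):
        self.backends = list(backends)
        self.healthy = {b: True for b in self.backends}
        self._next = 0  # index to try first on the next request

    def mark(self, backend, is_healthy):
        """Record the latest health-check result for a backend."""
        self.healthy[backend] = is_healthy

    def route(self):
        """Return (status, backend); (503, None) when no backend is healthy."""
        n = len(self.backends)
        for offset in range(n):
            i = (self._next + offset) % n
            if self.healthy[self.backends[i]]:
                self._next = (i + 1) % n  # resume after the chosen backend
                return 200, self.backends[i]
        return 503, None

pool = RoundRobinPool(["web1", "web2", "web3"])
pool.mark("web2", False)
print([pool.route()[1] for _ in range(4)])  # → ['web1', 'web3', 'web1', 'web3']
```

Advancing the pointer past the chosen backend (rather than past a fixed counter) keeps the remaining healthy backends evenly loaded when one drops out.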

@Jon: In IPv6-enabled facilities, NodeBalancer will terminate IPv6 and speak IPv4 to the backends. The hostname provided for your NodeBalancer will resolve to A and AAAA records, so a CNAME will work as you’d expect for everyone.

@Jed Awesome, that is exactly what I wanted to know. Great implementation and I look forward to using it!

Disappointed not to see SSL endpoint support. Advantage EC2. Granted, Linodes have more CPU, but if I want cookie-based affinity, only the SSL endpoint can see the cookie.

Really appreciate the effort going into the NodeBalancers! There is a lot that the NodeBalancers do right, and I recommend that everybody try them out. If we didn’t already have load balancing implemented, this would definitely be a good starting point.

They are a great start, and what they offer is very easy to configure. However, a few key features/functions are missing that prevent us from using them in production, and that is why we continue to use nginx to load balance our stack.

1) HTTPS/SSL: while it is “supported”, we really need all of the monitoring features offered for HTTP. In our configuration it would be nice to at least bind our HTTPS pool so that it fails at the same time as our HTTP pool. Assuming other people serve HTTPS and HTTP from the same servers as we do, this would at least let HTTP monitoring drive our HTTPS/SSL pool.

2) Longer timeouts before giving up, or perhaps a retry count. With our nginx load-balancing configuration we don’t seem to get gateway error messages nearly as quickly. While this one isn’t a deal breaker, it was more frustrating to deal with and seemed a bit inconsistent. This may be caused by a configuration issue on our part.

3) Cross-datacenter failover. I understand why you may choose not to load balance across two datacenters, but at least let us link two NodeBalancers together to act as failover for each other. At the very minimum, let us place external IPs in the pool with a lower weight. This is the fundamental function whose absence prevents us from using this service.

All in all, this product just launched and offers a lot for a first revision. We encountered only three issues. Once the above are addressed, we will be eager to test NodeBalancers again for use in our stack!
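Items 2 and 3 can be sketched together in Python. This is a hypothetical model of what klappy asks for, not a NodeBalancer feature described in this thread: a member is marked down only after several consecutive failed checks (`max_failures=3` is an arbitrary assumption), and the "lower weight" external IP is modeled as a strict backup tier, which is one reading of that request. The addresses are placeholders.

```python
from dataclasses import dataclass

@dataclass
class Member:
    address: str
    backup: bool = False  # hypothetical flag: serve only when no primary is up
    failures: int = 0     # consecutive failed health checks
    up: bool = True

def record_check(member, passed, max_failures=3):
    """Mark a member down only after max_failures consecutive failed checks."""
    if passed:
        member.failures = 0
        member.up = True
    else:
        member.failures += 1
        if member.failures >= max_failures:
            member.up = False

def eligible(members):
    """Healthy primaries; healthy backups only when every primary is down."""
    primaries = [m for m in members if not m.backup and m.up]
    if primaries:
        return primaries
    return [m for m in members if m.backup and m.up]

pool = [Member("10.0.0.1"), Member("10.0.0.2"), Member("203.0.113.10", backup=True)]
for _ in range(3):
    record_check(pool[0], passed=False)
    record_check(pool[1], passed=False)
print([m.address for m in eligible(pool)])  # → ['203.0.113.10']
```

Because health is tracked per member rather than per port, the same `up` flag could gate both an HTTP and an HTTPS pool on the same servers, which is the binding item 1 asks for.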

Will there be an option for balancing Nodes across Datacentres in future? I think this would be of great benefit in HA situations.

Cheers

With our own implementation of HAProxy or whatnot, we know how many connections can be served concurrently based on the hardware specs.

Does Linode have data on how many concurrent connections each NodeBalancer can serve?

Thanks

Freek’n sweet!

One step closer to being a great cloud host. Could the next step be Cloud Storage?

@Allen: We limit concurrent connections to such a high figure that you are very unlikely to hit it. In performance testing during development I surprised myself with the figures that were achieved. That is all monitored, too, and provisioning and capacity planning are watched closely.

@klappy: Point by point:

1) We talked at length about SSL termination during development. We have technical questions about it, and it complicates things a bit in our architecture; we are listening to this feedback, though.

2) We fixed an issue at the end of last week that might have caused these. You should give it another try, and perhaps we can diagnose it together if that didn’t resolve it.

3) Based on personal experience I’d advise DNS for this.

Thanks for the feedback, and we’re definitely listening. Every tweet, every blog comment, every forum post, every ticket — we do read them and consider the feedback. (Actually my morning ritual, now, to Cmd+F the tickets page for “NodeBalancer”.)

You guys definitely rock; you are always moving forward without doing evil! I hope you don’t get bought by a big greedy company!!

Cheers!

Is there any replication between the backend Linodes?

In this setup, the ideal would be two or more backend web server Linodes, plus one DB server and, of course, the NodeBalancer. Is that correct?

Thanks!

@Jed Thanks! I’ll give it another shot soon. After my review, I thought I would give AWS a chance at loadbalancing to help get another perspective.

With SSL, AWS handles it as a group, as mentioned. This made it quite easy to use and consistent with non-SSL requests.

Thanks for fixing the timeout issues, I’ll let you know if I encounter any more.

We do use DNS for failover and even for load balancing between datacenters. Even with the slimmest TTL of 30s, a client can be hosed for up to 2 minutes while DNS failover resolves. With many ISPs ignoring 30s TTLs and caching for 5 minutes, that’s too much downtime. That is why it is important to us to have load balancers that fail over, since they can act immediately. AWS has the same limitation, which is the reason we use nginx to handle this layer for us.
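klappy’s point can be put as rough arithmetic. The sketch below assumes the worst case is failure detection time, plus the time to publish the DNS change, plus however long resolvers actually cache the old record (the TTL, or a resolver’s own minimum if it ignores short TTLs). The detection and publish times in the example are assumptions, not measurements from this thread.

```python
def worst_case_dns_failover(detect_s, publish_s, ttl_s, resolver_floor_s=0):
    """Rough upper bound, in seconds, that a client may keep using a dead address.

    detect_s:         time for monitoring to notice the failure (assumed)
    publish_s:        time for the authoritative DNS change to go live (assumed)
    ttl_s:            TTL on the record
    resolver_floor_s: minimum cache time a resolver enforces regardless of TTL
    """
    effective_ttl = max(ttl_s, resolver_floor_s)
    return detect_s + publish_s + effective_ttl

print(worst_case_dns_failover(60, 30, 30))                        # → 120
print(worst_case_dns_failover(60, 30, 30, resolver_floor_s=300))  # → 390
```

A load balancer that health-checks its own pool pays only the detection term, which is why failover at that layer reacts faster than DNS.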

Thanks again for listening and working so hard to provide the most awesome cost effective service!

This might be a stupid question, especially since I have not used NodeBalancer yet, but:

if NodeBalancer is a software load balancer that runs on a VM for the same price (actually more, since the 12/24-month plans don’t give discounts?), why wouldn’t I simply get another node and put the same software (or any load balancer software, for that matter) on it myself?

@sejal a single VM isn’t clustered in an HA setup, so you’d need more than one VM, and NodeBalancers are managed for you (what’s your time worth?).

Looking forward to seeing a CDN and file storage solution from Linode next.

This would be a big money maker for Linode and a welcome addition for your customers.

With the Asian data center now available, you could build a decent (and hopefully low-cost) CDN just within your own data centers. With the extra revenue, you could open a couple of extra data centers in strategic locations around the globe, improving the edges of the CDN.

Up to how many Mbit/s can one load balancer handle?

I have a very traffic-intensive website that I’d like to migrate over to Linode (from Slicehost). On Slicehost I’ve set up 15 load balancer nodes (HAProxy) in front of another 20 nodes that serve the actual website.

At first I thought a couple of load balancers would handle it, but I found out the hard way that Slicehost nodes can only handle up to 32 Mbit/s, and my site usually needs to handle traffic levels above 500 Mbit/s.

I’d like to know the limit on internet traffic for these load balancers. Slicehost is forcing me to go to Rackspace, but the costs there are going to kill me with the kind of bandwidth I need to handle; you guys seem like the best option.

Angel – A NodeBalancer runs inside of a Linode container (in a clustered, high-availability, failover-ing, load-balanced, etc., configuration). A single Linode, when unrestricted, can easily achieve the wire speed of its host’s connection into the access layer.

Depending on your workload, you may run up against the active-connections limit of 5000 per NodeBalancer. Of course, you can easily work around this by adding more NodeBalancers and round-robining between them in DNS. You’d also benefit from the aggregate performance of several NodeBalancers running on different back-end containers, hosts, and switch links.
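Chris’s workaround can be turned into a back-of-the-envelope calculation. This sketch takes the 5000 active-connection figure quoted above as a constant (it reflects the limit stated in this reply and may differ today); `headroom` is an assumed safety margin, and `rotate` only illustrates how DNS round-robin hands out the same records in a different order. The IPs are documentation placeholders.

```python
import math

# Per-NodeBalancer active-connection limit as quoted in the reply above.
CONNECTIONS_PER_NODEBALANCER = 5000

def nodebalancers_needed(peak_active_connections, headroom=0.0):
    """Smallest number of NodeBalancers whose combined limit covers the peak."""
    target = peak_active_connections * (1 + headroom)
    return max(1, math.ceil(target / CONNECTIONS_PER_NODEBALANCER))

def rotate(records, shift):
    """DNS round-robin sketch: each answer starts at a different record."""
    shift %= len(records)
    return records[shift:] + records[:shift]

print(nodebalancers_needed(20000))                 # → 4
print(nodebalancers_needed(20000, headroom=0.25))  # → 5
records = ["192.0.2.10", "192.0.2.11", "192.0.2.12"]
print(rotate(records, 1))  # → ['192.0.2.11', '192.0.2.12', '192.0.2.10']
```

Resolvers and clients may cache or reorder answers, so DNS round-robin spreads load only approximately; the headroom margin absorbs some of that imbalance.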

I would be _very_ surprised if you couldn’t achieve multi-Gbit throughputs with a small number of NodeBalancers (and the back-ends to service them).

Hope that helps!

-Chris

Is it possible to span across different datacenters using NodeBalancers? It would be great if the NodeBalancer can divert the user’s traffic to the nearest datacenter to reduce latency, and this could also be a method to have reliable fail-over if one datacenter goes down. Would this work?

So far, my experience with the NodeBalancers is great. One issue now, though, is that an application I’m working on will soon need to run entirely over SSL, and it would be nice to have full statistics and monitoring of the traffic (including source IP) plus session stickiness.

So, I was just wondering:

1) Any updates on the ideas about implementing SSL termination in NodeBalancer?

2) How would a Linode 512 configured as a load balancer (or two in an HA setup) differ from a Linode NodeBalancer in terms of performance and availability?
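Without SSL termination, a balancer cannot read cookies inside the encrypted stream, so stickiness for SSL traffic has to key on something visible at the TCP level, such as the client’s source IP. The following is a minimal sketch of source-IP hashing under that assumption; `sticky_backend` is a hypothetical helper, not how NodeBalancer implements stickiness.

```python
import hashlib
import ipaddress

def sticky_backend(client_ip, backends):
    """Pick a backend deterministically from the client's source IP.

    Works for IPv4 and IPv6 because the address is hashed in packed form.
    """
    packed = ipaddress.ip_address(client_ip).packed
    # hashlib is stable across processes, unlike Python's built-in hash().
    digest = hashlib.sha256(packed).digest()
    index = int.from_bytes(digest[:8], "big") % len(backends)
    return backends[index]

backends = ["web1", "web2", "web3"]
print(sticky_backend("198.51.100.7", backends))
```

Two caveats of this simple modulo mapping: adding or removing a backend reassigns most clients (consistent hashing reduces that), and many users behind one NAT address all land on the same backend.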

Can Linode act as failover rather than as a load distributor?

Yes – a NodeBalancer does both – it will route incoming connections only to healthy backends.